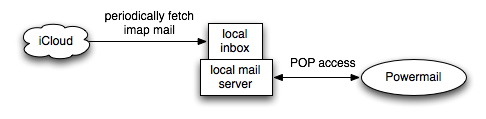

I opened Powermail and discovered that it did not receive my new iCloud mail. Apparently, iCloud does not provide POP access anymore. So I had two options:

- abandon an email client I’ve been using happily for ten years

- be creative

Naturally, I chose the second option and devised a cunning plan.

The idea is to periodically fetch email from the iCloud IMAP server and store it in a local inbox, which a local mail server then makes available over POP. Software of choice: getmail for fetching, cron for periodic execution and Dovecot as the mail server.
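To make the fetching half of this plan concrete, here is a minimal Python sketch that uses only the standard library's imaplib and mailbox modules. It illustrates the data flow (IMAP in, mbox file out) and is not how getmail is implemented. The helper names fetch_new and store are hypothetical, and the sketch assumes the caller saves the set of already-fetched IMAP UIDs between runs, a bookkeeping job getmail normally does for you.

```python
import imaplib
import mailbox


def fetch_new(host, user, password, seen_uids, port=993):
    """Return (uid, raw_bytes) pairs for INBOX messages whose UID is not in seen_uids.

    Assumption: seen_uids is a set of UID byte strings persisted by the caller.
    UIDs are only stable while the mailbox's UIDVALIDITY stays the same.
    """
    conn = imaplib.IMAP4_SSL(host, port)
    try:
        conn.login(user, password)
        conn.select("INBOX", readonly=True)  # read-only: leave server-side flags untouched
        typ, data = conn.uid("SEARCH", None, "ALL")
        if typ != "OK":
            raise imaplib.IMAP4.error("UID SEARCH failed")
        new = []
        for uid in data[0].split():
            if uid in seen_uids:
                continue
            typ, parts = conn.uid("FETCH", uid, "(BODY.PEEK[])")
            new.append((uid, parts[0][1]))  # parts[0] is (response info, message bytes)
        return new
    finally:
        conn.logout()


def store(raw_messages, mbox_path):
    """Append raw RFC 822 messages to a local mbox file, creating it if missing."""
    box = mailbox.mbox(mbox_path)
    box.lock()  # fcntl and dot-file locks, so concurrent readers see a consistent file
    try:
        for raw in raw_messages:
            box.add(raw.replace(b"\r\n", b"\n"))  # IMAP uses CRLF; Unix mbox files use LF
        box.flush()
    finally:
        box.unlock()
        box.close()
```

As far as I can tell, Python's mbox writer uses the older ">From " quoting rather than strict mboxrd, which is one more reason to leave the real work to getmail.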

In the remainder of this article, I briefly explain how to configure this. Familiarity with Unix and the terminal is required.

Installation

It is not my intention to describe the software installation in detail, as it depends on your specific system and preferences. I installed getmail as described on its website and Dovecot using MacPorts. Cron is part of Mac OS X and of any half-decent Unix distribution.

Getmail

The configuration file for getmail is .getmail/getmailrc in your home directory. Replace the placeholder values user@me.com, password and username with your own settings.

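```ini
# Fetch from iCloud over IMAP with SSL
[retriever]
type=SimpleIMAPSSLRetriever
server=imap.mail.me.com
port=993
username=user@me.com
password=password
mailboxes=("INBOX",)

# Append retrieved messages to this local mbox file
[destination]
type=Mboxrd
path=/Users/username/.getmail/mbox

# Only retrieve messages getmail has not retrieved before
[options]
read_all=false
```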
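Before handing this file to cron, it can be worth sanity-checking it. The sketch below reads it with Python's configparser and flags a few likely mistakes, including a missing mbox file, since getmail's Mboxrd destination does not create one. The function name check_getmailrc and the particular checks are my own assumptions, not part of getmail.

```python
import configparser
import os


def check_getmailrc(path):
    """Return a list of human-readable problems found in a getmailrc file (hypothetical helper)."""
    # interpolation=None so a '%' in the password is not treated specially
    cfg = configparser.ConfigParser(interpolation=None)
    if not cfg.read(path):
        return [f"cannot read {path}"]
    problems = [f"missing [{s}] section" for s in ("retriever", "destination")
                if not cfg.has_section(s)]
    if problems:
        return problems

    r = cfg["retriever"]
    if r.get("type") != "SimpleIMAPSSLRetriever":
        problems.append("retriever type is not SimpleIMAPSSLRetriever")
    if r.getint("port", fallback=993) != 993:
        problems.append("iCloud IMAP over SSL uses port 993")
    for key in ("server", "username", "password"):
        if not r.get(key):
            problems.append(f"retriever {key} is empty")

    d = cfg["destination"]
    mbox = os.path.expanduser(d.get("path", ""))
    if d.get("type") == "Mboxrd" and not os.path.isfile(mbox):
        problems.append(f"mbox file {mbox!r} does not exist; create it with touch first")
    return problems
```

Calling check_getmailrc(os.path.expanduser("~/.getmail/getmailrc")) returns an empty list when none of these checks trip.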

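Once this configuration file is in place, and the mbox file exists (getmail's Mboxrd destination will not create it, so run touch ~/.getmail/mbox first), we can run the getmail command to check that it works. This is a test run on my system; your output may vary.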

```
$ getmail
getmail version 4.25.0
Copyright (C) 1998-2009 Charles Cazabon. Licensed under the GNU GPL version 2.
SimpleIMAPSSLRetriever:user@me.com@imap.mail.me.com:993: 0 messages (0 bytes) retrieved, 168 skipped
```
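The last line is the interesting one: it reports how many messages getmail downloaded and how many it skipped, the latter presumably being messages already retrieved on earlier runs, since read_all=false tells it to fetch only new ones. If you ever want to log these counts instead of discarding them, a regular expression is enough. This sketch is based only on the sample output above, so other getmail versions may word the line differently; parse_summary is a hypothetical helper.

```python
import re

# Matches the summary seen above, e.g. "0 messages (0 bytes) retrieved, 168 skipped".
# The optional plural "s" is an assumption about how a single message is reported.
SUMMARY = re.compile(r"(\d+) messages? \((\d+) bytes?\) retrieved, (\d+) skipped")


def parse_summary(output):
    """Return (retrieved, bytes, skipped) from getmail's output, or None if absent."""
    match = SUMMARY.search(output)
    if match is None:
        return None
    return tuple(int(group) for group in match.groups())
```

For the test run above, parse_summary returns (0, 0, 168).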

Cron

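To run the getmail command every 10 minutes, we need to install a cron job. Run crontab -e in the terminal and add the following line, adjusting /usr/local/bin/getmail if getmail is installed elsewhere (running which getmail prints its location). The redirection discards getmail's output, which cron would otherwise try to mail to you after every run.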

```
# minute hour day-of-month month day-of-week command
*/10 * * * * /usr/local/bin/getmail > /dev/null 2>&1
```
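The */10 in the minute field is cron's step syntax, and the remaining four fields are *, so the job runs every hour of every day. To illustrate how such a field expands, here is a small hypothetical Python helper. It covers only *, single numbers, ranges, steps and comma lists, and is not a complete cron parser.

```python
def expand_cron_field(field, lo, hi):
    """Expand one cron field (e.g. "*/10", "1-5", "0,30") into a sorted list of values."""
    values = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if step < 1:
            raise ValueError(f"invalid step in {part!r}")
        if base == "*":
            start, end = lo, hi  # '*' means the field's full range
        elif "-" in base:
            start, end = (int(x) for x in base.split("-", 1))
        elif step_text:
            raise ValueError(f"a step needs '*' or a range: {part!r}")
        else:
            start = end = int(base)
        if not lo <= start <= end <= hi:
            raise ValueError(f"{part!r} is outside {lo}-{hi}")
        values.update(range(start, end + 1, step))
    return sorted(values)
```

For the crontab line above, expand_cron_field("*/10", 0, 59) gives [0, 10, 20, 30, 40, 50]: getmail runs at those minutes of every hour.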

Dovecot

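The Dovecot configuration file resides in /opt/local/etc/dovecot/dovecot.conf when installed through MacPorts. Mine looks as follows (this is Dovecot 1.x syntax; Dovecot 2.x uses a different layout for the auth and listener sections):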

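```
# Serve POP3 only
protocols = pop3
# Plaintext logins are still allowed from 127.0.0.1, which Dovecot treats as a secure connection
disable_plaintext_auth = yes
ssl = no
# mbox root directory; INBOX is the file getmail delivers to
mail_location = mbox:/Users/username/.getmail:INBOX=/Users/username/.getmail/mbox

protocol pop3 {
  # Loopback interface only, on an unprivileged port
  listen = 127.0.0.1:11000
}

auth default {
  mechanisms = plain

  # Local POP3 passwords (see dovecot-passwd below)
  passdb passwd-file {
    args = /Users/username/.getmail/dovecot-passwd
  }

  # Fall back to system accounts
  passdb pam {
  }

  userdb passwd {
  }

  # Run the auth process as root (needed for PAM)
  user = root
}
```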
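The mail_location line is what ties Dovecot to getmail: the part after mbox: is the root directory for mbox folders, and the INBOX= option points Dovecot's INBOX at the very file getmail appends to. A minimal sketch of how that string breaks down, assuming simple values without escaped colons (Dovecot's own parser supports more than this; parse_mail_location is a hypothetical name):

```python
def parse_mail_location(location, home=None):
    """Split a Dovecot mail_location such as "mbox:ROOT:INBOX=FILE".

    Returns (format, root, options). A leading "~" is replaced by `home` when given.
    Escaped colons and other advanced syntax are not handled.
    """
    fmt, _, rest = location.partition(":")
    root, *extras = rest.split(":")
    options = dict(item.split("=", 1) for item in extras)  # KEY=VALUE pairs

    def expand(path):
        if home is not None and (path == "~" or path.startswith("~/")):
            return home + path[1:]
        return path

    return fmt, expand(root), {key: expand(value) for key, value in options.items()}
```

Applied to the line above, this yields the format mbox, the root /Users/username/.getmail and INBOX pointing at /Users/username/.getmail/mbox, the same path as getmail's destination.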

Dovecot also needs a password file, which we configured to be .getmail/dovecot-passwd in your home directory. You can choose the local password freely; it is the password local clients use to access your local mailbox. The {plain} prefix tells Dovecot the password is stored in plain text.

```
username:{plain}local-password::::/Users/username/.getmail::userdb_mail=mbox:~/mbox
```
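Because one stray colon shifts every following field, it can be safer to generate this entry than to type it. A minimal Python sketch, assuming Dovecot's passwd-file field order as given in the docstring; passwd_file_line and write_passwd_file are hypothetical helpers. The file is written with mode 0600 because it holds a plaintext password; Dovecot's auth process can still read it, since it runs as root in the configuration above.

```python
import os


def passwd_file_line(user, password, home, mail):
    """Build one Dovecot passwd-file entry in the layout used above.

    Fields: user:password:uid:gid:gecos:home:shell:extra_fields
    (uid, gid, gecos and shell are left empty here).
    """
    if ":" in user or ":" in password:
        raise ValueError("':' separates fields and cannot appear in user or password")
    fields = [user, "{plain}" + password, "", "", "", home, "", "userdb_mail=" + mail]
    return ":".join(fields)


def write_passwd_file(path, lines):
    """Write entries to `path`, readable and writable only by the owner."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write("\n".join(lines) + "\n")
    os.chmod(path, 0o600)  # also tightens a pre-existing file that had looser permissions
```

passwd_file_line("username", "local-password", "/Users/username/.getmail", "mbox:~/mbox") reproduces the entry shown above exactly.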

Dovecot should be restarted after configuration.

Configuring your mail client

You can now use your favorite POP3 email client to access your local inbox with the following settings:

- Incoming mail server: localhost

- Port: 11000

- Username: username

- Password: local-password
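Before pointing a mail client at it, the same settings can be exercised with Python's standard poplib module. A successful login confirms that Dovecot is listening and accepts the local password, and a non-zero message count suggests getmail's deliveries are visible. A minimal sketch: the defaults mirror the placeholder values above, check_local_inbox is a hypothetical helper, and the pop_factory parameter exists only so it can be tried without a live server.

```python
import poplib


def check_local_inbox(host="localhost", port=11000, user="username",
                      password="local-password", pop_factory=poplib.POP3):
    """Log in to the local POP3 service and return (message_count, mailbox_bytes)."""
    conn = pop_factory(host, port, timeout=10)
    try:
        conn.user(user)
        conn.pass_(password)
        return conn.stat()  # poplib returns (count, size) as integers
    finally:
        conn.quit()
```

With Dovecot running, check_local_inbox() should return a tuple such as (count, size); a poplib.error_proto exception usually points at the password file or the auth section.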